Aside from the humorous reminder of days gone by, this post is a recap of my experiences extracting cherry blossom and temperature data from the Japan Meteorological Agency (JMA).

Background

I’ve wanted to go back to Japan ever since I first visited several years ago. In particular, I would like to be there during the cherry blossom season in spring, so using it as the basis for a data science project sounded like fun.

The first task I tackled was downloading and processing the cherry blossom dates. JMA’s site has separate pages for the date of first bloom and the date of peak bloom. While processing and translating the data, I had to add rate limiting to the requests I was making to Google Translate.

A brief translation example

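```python
import time

from googletrans import Translator


def batch_translation(df, column_src, batch_size=100):
    """Translate df[column_src] from Japanese in place, one batch at a time."""
    idx = 0
    while idx < df[column_src].size:
        # Spawn a new translator session to see if that gets past the 429 code from Google.
        translator = Translator()
        translator.raise_Exception = True

        # .loc label slices include the end label, so idx:idx+batch_size would cover
        # batch_size + 1 rows; slicing df.index by position keeps batches exact.
        rows = df.index[idx:idx + batch_size]
        df.loc[rows, column_src] = (
            df.loc[rows, column_src]
            .apply(translator.translate, src='ja')
            .apply(getattr, args=('text',))
        )

        idx += batch_size
        print(f"Current index: {idx} of {df[column_src].size}")

        # Pause between batches so the requests are spread out.
        time.sleep(10)
```

A couple of things to note here: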

- I split the entries into batches of one hundred. Since the entries being translated were mostly city names, this works out to roughly one hundred words per batch.

- There was a ten-second pause between batches.

- I recreated the translator instance for every batch.

In general, the first two items should be pretty universal. If you’re rate limiting, restrict the number of items in each request by splitting them into batches, and pause for a reasonable amount of time after each batch. The third item was a troubleshooting step. I’m not super happy with that solution (one shouldn’t need to re-instantiate the translator); however, it ended up working, so I left it in.
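The first two points generalize beyond Google Translate. As a rough, library-agnostic sketch (the name `process_in_batches` and its parameters are hypothetical, and `func` stands in for whatever actually sends the request), batching with a pause between batches might look like this:

```python
import time
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def process_in_batches(
    items: Sequence[T],
    func: Callable[[List[T]], List[R]],
    batch_size: int = 100,
    pause_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[R]:
    """Apply func to consecutive batches of items, pausing between batches.

    Hypothetical helper: func is assumed to send one request per batch and
    return one result per item in that batch.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    results: List[R] = []
    for start in range(0, len(items), batch_size):
        batch = list(items[start:start + batch_size])
        results.extend(func(batch))
        # Pause only if another batch is still waiting to be sent.
        if start + batch_size < len(items):
            sleep(pause_s)
    return results


# Usage with a stand-in for a translation call; no real requests are made.
pauses: List[float] = []
print(process_in_batches(["a", "b", "c", "d", "e"], lambda b: [s.upper() for s in b],
                         batch_size=2, pause_s=10, sleep=pauses.append))
# ['A', 'B', 'C', 'D', 'E']
print(pauses)
# [10, 10]
```

Passing `sleep` in as a parameter makes it easy to check the pacing in a small test without actually waiting.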

For anyone interested, I uploaded the data I gathered and translated over at Kaggle.

Limiting temperature data requests

Extracting the temperature data was a much more involved process than extracting the cherry blossom dates, which were available as text tables across several pages. The temperature data was available as CSV, but only through a form on a page that I had to reverse engineer.

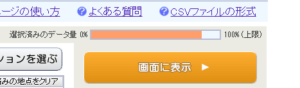

Once I had reverse engineered the form’s POST request, I was able to download the data as CSV. JMA enforces a maximum data limit per request (Fig 1) to protect their server from being overloaded. After trying a few different year ranges, I determined that 25 years for a single city (or site) stayed within the limits they set, so I used that as the basis for splitting the entire time range into a series of batches.
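Before sending anything, it can help to check the batch boundaries on their own. The helper below is hypothetical; it only reproduces the year-range arithmetic used in `batch_site_download`, assuming the same 25-year cap:

```python
from typing import List, Tuple


def year_batches(start_year: int, end_year: int, max_years: int = 25) -> List[Tuple[int, int]]:
    """Split start_year..end_year into (start, end) pairs spanning at most max_years.

    Hypothetical helper mirroring batch_site_download's loop: consecutive
    batches share their boundary year, and the last batch is clamped to end_year.
    """
    return [
        (year, min(year + max_years, end_year))
        for year in range(start_year, end_year, max_years)
    ]


print(year_batches(1955, 2021))
# [(1955, 1980), (1980, 2005), (2005, 2021)]
```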

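```python
from time import sleep

import pandas as pd

# download_site_data and site_list are defined elsewhere; see the notes below.


def batch_site_download(station_number, start_year=1955, end_year=2021, debug=False):
    # 25 years for a single site stayed within JMA's per-request data limit.
    max_years = 25
    site_df = pd.DataFrame()

    for year in range(start_year, end_year, max_years):
        # Clamp the final batch so it doesn't run past end_year.
        batch_end = min(year + max_years, end_year)
        cur_site_df = download_site_data(station_number, year, batch_end, debug)
        site_df = pd.concat([site_df, cur_site_df])
        # Drop rows that appear in more than one batch (e.g. a shared boundary year).
        site_df = site_df[~site_df.index.duplicated()]
        # Short pause between batches for the same site.
        sleep(5)

    return site_df


def multiple_site_download(sites=site_list.index):
    city_dicts = {}

    for city in sites:
        city_dicts[city] = batch_site_download(site_list.loc[city, 'Site Id'], debug=True)

        print("Saving CSV", flush=True)
        city_dicts[city].to_csv('Temp Data/' + city + '.csv')

        # Much longer pause between cities, with a visible countdown.
        print("Sleeping 70s to prevent overloading server")
        for i in range(70, 0, -1):
            print(f"{i}", end="\r", flush=True)
            sleep(1)

    return city_dicts
```

A couple of notes on this snippet of code: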

download_site_data: This function wraps the requests to the JMA server and loads the returned CSV into a pandas DataFrame. It also checks the server’s response: if the response isn’t a CSV, it prints an error message with any relevant information from the page that JMA returned.

Error example:

HTML Error Page Received: メニューページに戻り、もう一度やり直してください。

This message roughly translates as “Please return to the menu page and try again.” In practice, I found that it meant JMA had no data for that site.
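`download_site_data` itself isn’t shown here, but the CSV-or-error check it performs can be sketched with the standard library. This is an illustration under assumptions, not JMA-specific code: the names are hypothetical, the body is assumed to be already-decoded text, and an HTML page is detected from the `Content-Type` header or the start of the body.

```python
import csv
import io
from html.parser import HTMLParser
from typing import List, Tuple, Union


class _TextExtractor(HTMLParser):
    """Collect visible text from an HTML page, skipping <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip:
            self.chunks.append(text)


def parse_response(content_type: str, body: str) -> Tuple[bool, Union[List[List[str]], str]]:
    """Return (True, csv_rows) for a CSV body, or (False, page_text) for an HTML page.

    Assumption: any HTML response is an error page rather than data.
    """
    looks_like_html = body.lstrip().lower().startswith(("<!doctype html", "<html"))
    if "html" in content_type.lower() or looks_like_html:
        extractor = _TextExtractor()
        extractor.feed(body)
        extractor.close()
        return False, " ".join(extractor.chunks)
    return True, list(csv.reader(io.StringIO(body)))
```

A caller could then print `f"HTML Error Page Received: {result}"` whenever the first element of the returned tuple is `False`.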

site_list: This is a pandas DataFrame that I compiled from JMA’s site master list. JMA uses an alphanumeric identifier for each site; for Kyoto, it is s47759. Unfortunately, the master file only contained the last three (numeric) characters, so I had to make an assumption about the prefix. From my initial testing, s47 was the most common prefix for large cities (Kyoto, Osaka, Tokyo, etc.), so I used it as the prefix for every site. Thus far it has worked remarkably well.
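Building the identifiers from the master list is then a small string operation. A sketch, assuming the numeric part is stored as an integer (so it needs zero-padding back to three digits) and that the s47 prefix applies to every site, which is the same guess described above; `make_site_id` is a hypothetical name:

```python
# Assumption: s47 is guessed from testing with large cities, not confirmed by JMA.
SITE_PREFIX = "s47"


def make_site_id(site_number: int, prefix: str = SITE_PREFIX) -> str:
    """Build a site identifier from the three-digit numeric part in the master list."""
    if not 0 <= site_number <= 999:
        raise ValueError("expected the three-digit numeric part of the site id")
    # Assumption: leading zeros were lost when stored as an int, so pad back to 3 digits.
    return f"{prefix}{site_number:03d}"


print(make_site_id(759))  # s47759 (Kyoto)
```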

The most significant rate limiting in the code above is the seventy-second countdown between cities. For testing, I initially picked a set of five cities with a thirty-second wait between each one. However, after a couple of cities I started getting error messages indicating that the server was unable to retrieve the data. Since I could retrieve those cities individually, I assumed the errors were temporary and caused by how quickly I was sending requests. I gradually increased the wait until I reached seventy seconds, at which point all five cities downloaded without error.
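Tuning the wait by hand worked, but a common alternative is to retry a failed request with an exponentially growing delay. This is a different technique from the fixed countdown above, shown only as a sketch: `retry_with_backoff` is hypothetical, `request` is assumed to raise an exception on failure (the `download_site_data` described above prints errors instead), and the 30-second starting delay simply mirrors the first wait I tried.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    request: Callable[[], T],
    max_attempts: int = 5,
    base_delay_s: float = 30.0,
    factor: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call request(); after each failure, wait longer before trying again.

    Assumption: request() raises an exception when the server returns an error.
    The last exception is re-raised once max_attempts is used up.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = base_delay_s
    for attempt in range(1, max_attempts + 1):
        try:
            return request()
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(delay)
            delay *= factor  # e.g. 30 s, 60 s, 120 s, ...
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

Backoff only reacts to errors, so it complements rather than replaces a fixed pause between requests. In real use, catching only the specific errors the download raises would be safer than catching every exception.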

After that test, I ran the full set of sites, which took several hours. I then filled in any missing or incomplete data by comparing the full site list against the data I had downloaded.
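One way to find the gaps, sketched under assumptions: each site was saved as `<city>.csv` in a single folder (as `multiple_site_download` does), and “incomplete” is approximated by a minimum number of data rows. The function name and the `min_rows` threshold are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List


def find_sites_to_redo(site_names: Iterable[str], data_dir: Path, min_rows: int = 1) -> List[str]:
    """Return sites whose CSV is missing or has fewer than min_rows data rows.

    Assumption: one '<site>.csv' per site in data_dir, each with a single header row.
    """
    redo = []
    for name in site_names:
        path = data_dir / f"{name}.csv"
        if not path.exists():
            redo.append(name)
            continue
        with path.open(encoding="utf-8") as f:
            # Subtract one for the header row written by DataFrame.to_csv.
            data_rows = sum(1 for _ in f) - 1
        if data_rows < min_rows:
            redo.append(name)
    return redo
```

The returned names can then be passed straight back into `multiple_site_download` as its `sites` argument.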

Conclusions

First and foremost, err on the side of being overly cautious with someone else’s resources, because your assumptions about their site may not be correct. For instance, I had assumed that Google wouldn’t limit the number of requests a single person could send (barring extreme circumstances), but that was clearly not the case. Similarly, a thirty-second pause initially seemed like a very reasonable wait between my requests to JMA, but after trying it I realized it wasn’t long enough.

Small test sets are a critical part of any testing process, because it’s easier to see that something is going wrong with a small test than with your full data set. This is even more important when working with other people’s resources, so that you don’t overload them or waste your own time redoing work if your requests get blocked.

In short, like the title says, be kind. Think carefully about the impact of your data gathering before sending that request off into the aether.